First Day

Introduction to Linux containers.

What are containers?

A Container can be seen as a minimal virtual environment that can be used in any Linux-compatible machine (and beyond).

Using containers is time- and resource-saving as they allow:

Controlling for software installation and dependencies.

Reproducibility of the analysis.

Containers allow us to use exactly the same versions of the tools.

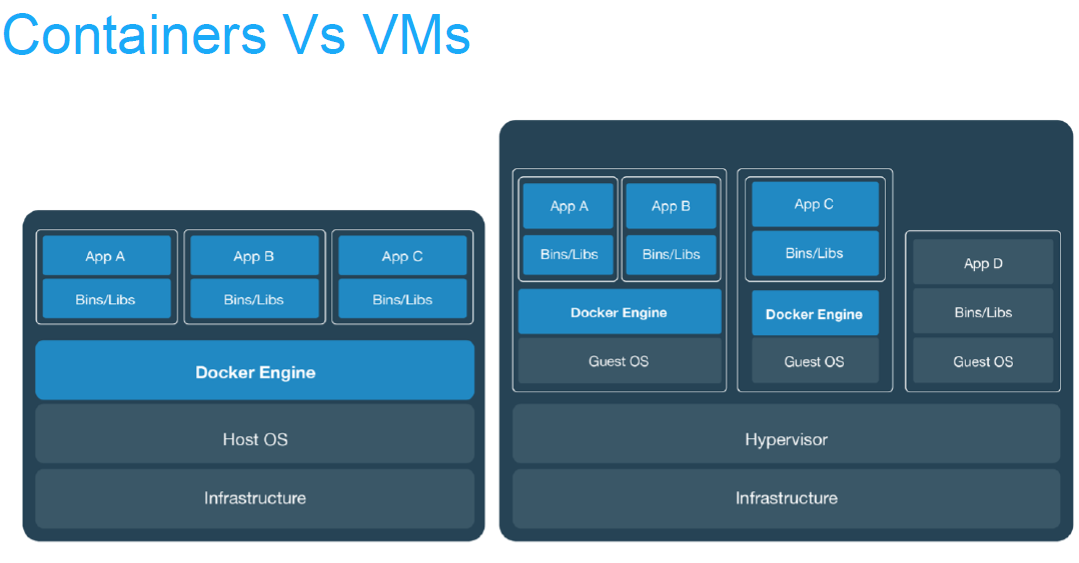

Virtual machines or containers ?

Virtualisation |

Containerisation (aka lightweight virtualisation) |

|---|---|

Abstraction of physical hardware |

Abstraction of application layer |

Depends on hypervisor (software) |

Depends on host kernel (OS) |

Do not confuse with hardware emulator |

Application and dependencies bundled all together |

Enable virtual machines |

Every virtual machine with an OS (Operating System) |

Virtualisation

Abstraction of physical hardware

Depends on hypervisor (software)

Do not confuse with hardware emulator

- Enable virtual machines:

Every virtual machine with an OS (Operating System)

Containerisation (aka lightweight virtualisation)

Abstraction of application layer

Depends on host kernel (OS)

Application and dependencies bundled all together

Virtual machines vs containers

Pros and cons

ADV |

Virtualisation |

Containerisation |

|---|---|---|

PROS. |

|

|

CONS. |

|

|

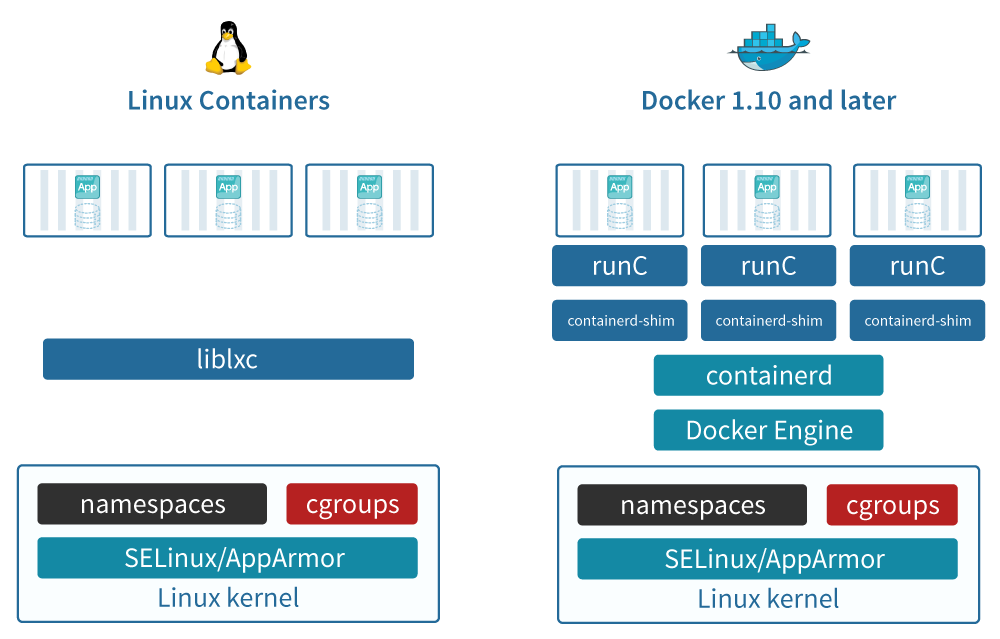

History of containers

chroot

chroot jail (BSD jail): first concept in 1979

Notable use in SSH and FTP servers

Honeypot, recovery of systems, etc.

Additions in Linux kernel

First version: 2008

- cgroups (control groups), before “process containers”

isolate resource usage (CPU, memory, disk I/O, network, etc.) of a collection of processes

- Linux namespaces

one set of kernel resources restrict to one set of processes

Introduction to Docker

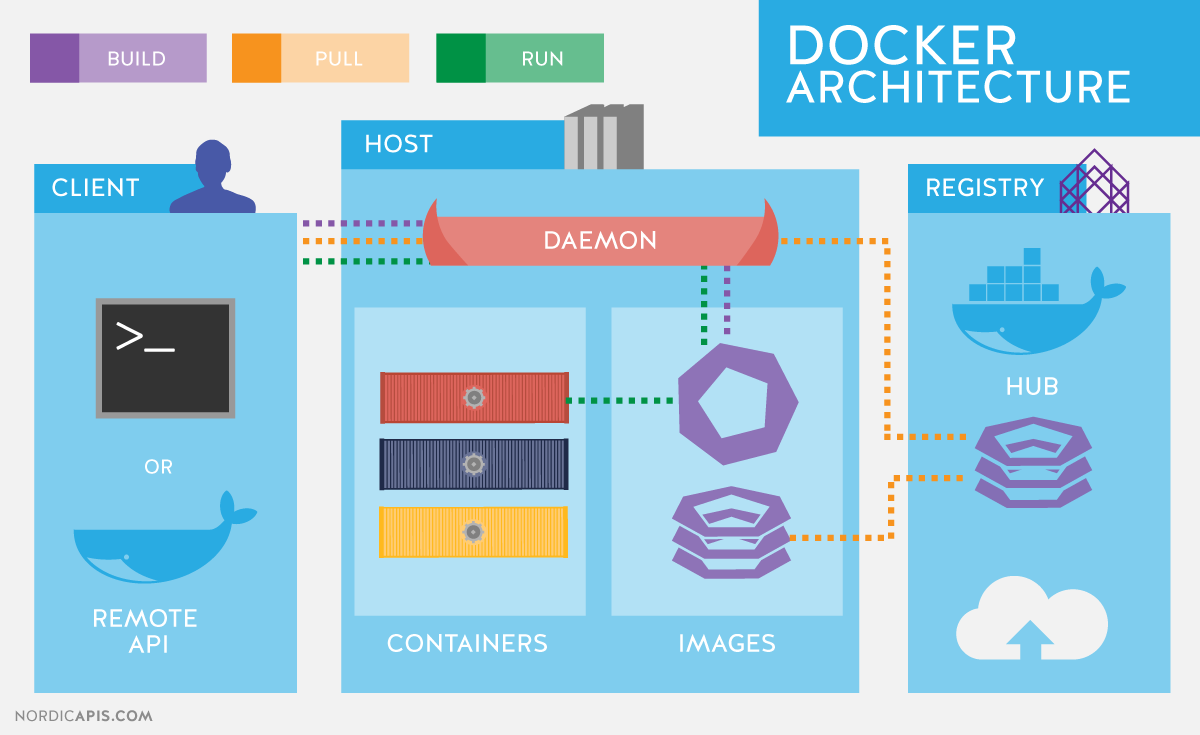

What is Docker?

Platform for developing, shipping and running applications.

Infrastructure as application / code.

First version: 2013.

Company: originally dotCloud (2010), later named Docker.

Established [Open Container Initiative](https://www.opencontainers.org/).

As a software:

Docker Enterprise Edition.

There is an increasing number of alternative container technologies and providers. Many of them are actually based on software components originally from the Docker stack and they normally try to address some specific use cases or weakpoints. As a example, Singularity, that we introduce later in this couse, is focused in HPC environments. Another case, Podman, keeps a high functional compatibility with Docker but with a different focus on technology (not keeping a daemon) and permissions.

Docker components

Read-only templates.

Containers are run from them.

Images are not run.

Images have several layers.

Images versus containers

Image: A set of layers, read-only templates, inert.

An instance of an image is called a container.

When you start an image, you have a running container of this image. You can have many running containers of the same image.

“The image is the recipe, the container is the cake; you can make as many cakes as you like with a given recipe.”

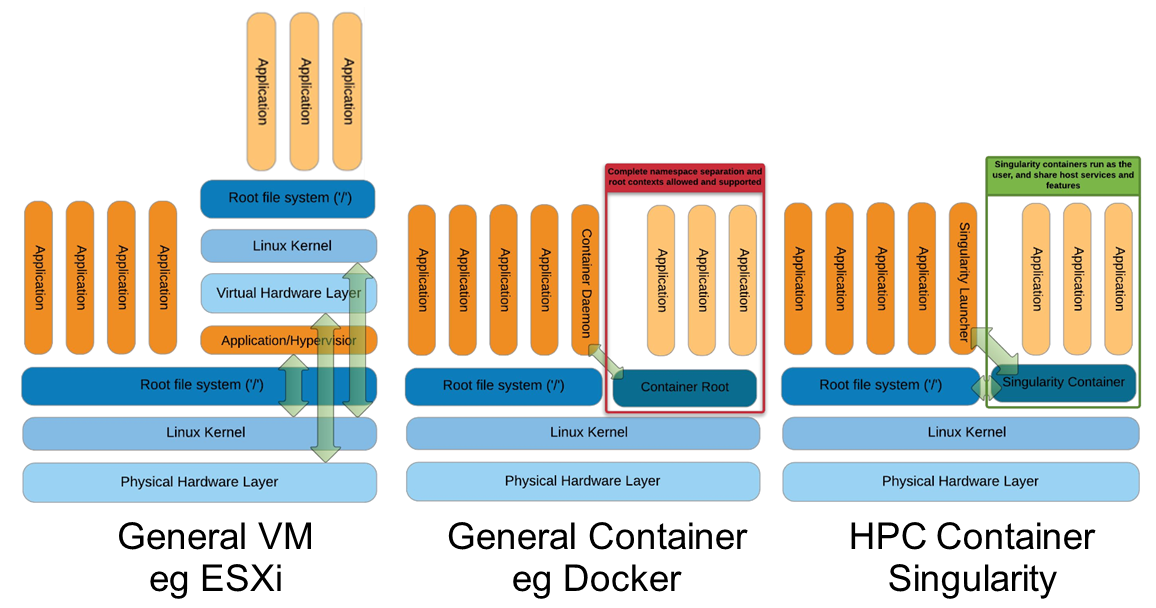

Introduction to Singularity

- Focus:

Reproducibility to scientific computing and the high-performance computing (HPC) world.

Origin: Lawrence Berkeley National Laboratory. Later spin-off: Sylabs

Version 1.0 -> 2016

More information: https://en.wikipedia.org/wiki/Singularity_(software)

Singularity architecture

Strengths |

Weaknesses |

|---|---|

No dependency of a daemon |

At the time of writing only good support in Linux |

Can be run as a simple user |

Mac experimental. Desktop edition. Only running |

Avoids permission headaches and hacks |

For some features you need root account (or sudo) |

Image/container is a file (or directory) |

|

More easily portable |

|

Two types of images: Read-only (production) |

|

Writable (development, via sandbox) |

Trivia

Nowadays, there may be some confusion since there are two projects:

They “forked” not long ago. So far they share most of the codebase, but eventually this might be different, and software might have different functionality.

Container registries

Container images, normally different versions of them, are stored in container repositories.

These repositories can be browser or discovered within, normally public, container registries.

Docker Hub

It is the first and most popular public container registry (which provides also private repositories).

Example:

https://hub.docker.com/r/biocontainers/fastqc

singularity build fastqc-0.11.9_cv7.sif docker://biocontainers/fastqc:v0.11.9_cv7

Biocontainers

Website gathering Bioinformatics focused container images from different registries.

Originally Docker Hub was used, but now other registries are preferred.

Example: https://biocontainers.pro/tools/fastqc

Via quay.io

https://quay.io/repository/biocontainers/fastqc

singularity build fastqc-0.11.9.sif docker://quay.io/biocontainers/fastqc:0.11.9--0

Via Galaxy project prebuilt images

singularity pull --name fastqc-0.11.9.sif https://depot.galaxyproject.org/singularity/fastqc:0.11.9--0

Galaxy project provides all Bioinformatics software from the BioContainers initiative as Singularity prebuilt images. If download and conversion time of images is an issue, this might be the best option for those working in the biomedical field.

Running and executing containers

Once we have some image files (or directories) ready, we can run processes.

Singularity shell

The straight-forward exploratory approach is equivalent to docker run -ti biocontainers/fastqc:v0.11.9_cv7 /bin/shell but with a more handy syntax.

singularity shell fastqc-0.11.9.sif

Move around the directories and notice how the isolation approach is different in comparison to Docker. You can access most of the host filesystem.

Singularity exec

That is the most common way to execute Singularity (equivalent to docker exec). That would be the normal approach in a HPC environment.

singularity exec fastqc-0.11.9.sif fastqc

Test a processing of a file from testdata directory:

singularity exec fastqc-0.11.9_cv7.sif fastqc B7_input_s_chr19.fastq.gz

Singularity run

This executes runscript from recipe definition (equivalent to docker run). Not so common for HPC uses. More common for instances (servers).

singularity run fastqc-0.11.9.sif

Environment control

By default Singularity inherits a profile environment (e.g., PATH environment variable). This may be convenient in some circumstances, but it can also lead to unexpected problems when your own environment clashes with the default one from the image.

singularity shell -e fastqc-0.11.9.sif

singularity exec -e fastqc-0.11.9.sif fastqc

singularity run -e fastqc-0.11.9.sif

Compare env command with and without -e modifier.

singularity exec fastqc-0.11.9.sif env

singularity exec -e fastqc-0.11.9.sif env

Exercise

- Generate a Singularity image of the last samtools version

Consider and compare different registry sources

Explore the inside contents of the image

Execute in different ways

samtoolsprogram (e. g., using fqidx option)

Introduction to Nextflow

DSL for data-driven computational pipelines. www.nextflow.io.

What is Nextflow?

Nextflow is a domain specific language (DSL) for workflow orchestration that stems from Groovy. It enables scalable and reproducible workflows using software containers. It was developed at the CRG in the Lab of Cedric Notredame by Paolo Di Tommaso. The Nextflow documentation is available here and you can ask help to the community using their gitter channel

In 2020, Nextflow has been upgraded from DSL1 version to DSL2. In this course we will use exclusively DSL2.

What is Nextflow for?

It is for making pipelines without caring about parallelization, dependencies, intermediate file names, data structures, handling exceptions, resuming executions, etc.

It was published in Nature Biotechnology in 2017.

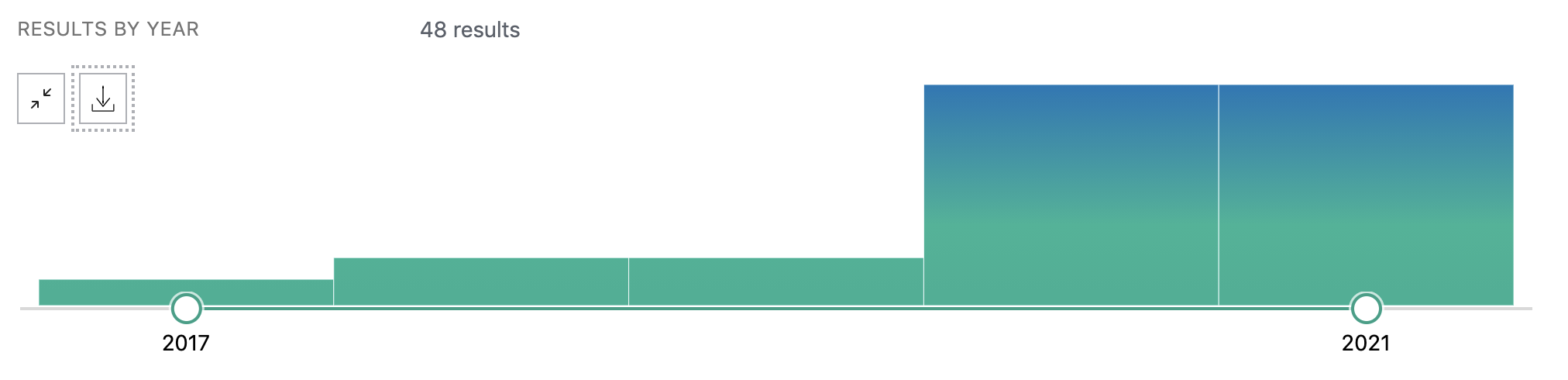

There is a growing number of PubMed publications citing Nextflow.

A curated list of Nextflow pipelines.

Many pipelines written collaboratively are provided by the NF-core project.

Some pipelines written in Nextflow have been used for the SARS-Cov-2 analysis, for example:

The artic Network pipeline ncov2019-artic-nf.

The CRG / EGA viral Beacon pipeline Master of Pores.

The nf-core pipeline viralrecon.

Main advantages

Fast prototyping

You can quickly write a small pipeline that can be expanded incrementally. Each task is independent and can be easily added to other. You can reuse scripts without re-writing or adapting them.

Reproducibility

Nextflow supports Docker and Singularity containers technology. Their use will make the pipelines reproducible in any Unix environment. Nextflow is integrated with GitHub code sharing platform, so you can call directly a specific version of a pipeline from a repository, download and use it on-the-fly.

Portability

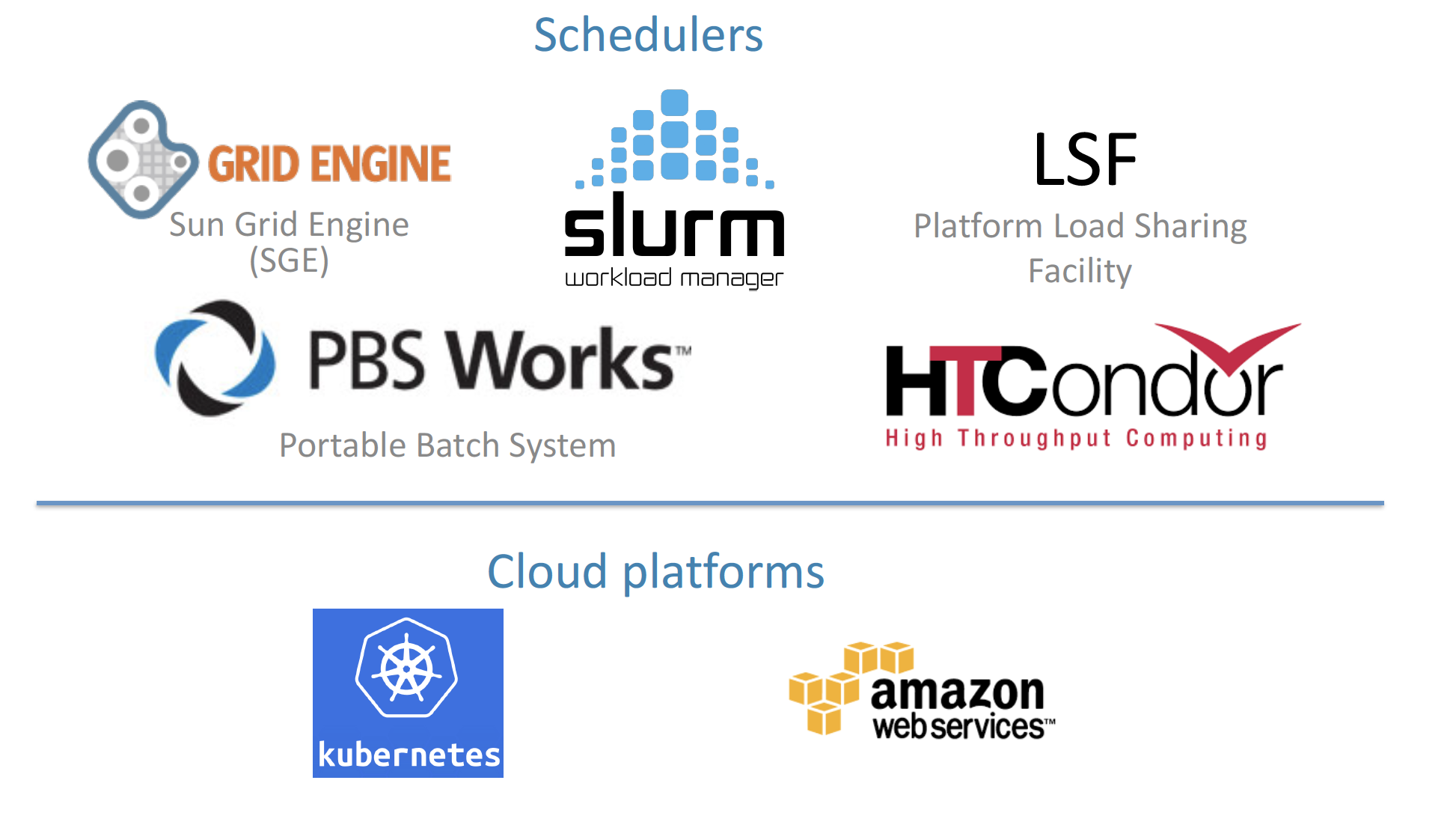

Nextflow can be executed on multiple platforms without modifiying the code. It supports several schedulers such as SGE, LSF, SLURM, PBS, HTCondor and cloud platforms like Kubernetes, Amazon AWS, Google Cloud.

Scalability

Nextflow is based on the dataflow programming model which simplifies writing complex pipelines. The tool takes care of parallelizing the processes without additionally written code. The resulting applications are inherently parallel and can scale-up or scale-out transparently; there is no need to adapt them to a specific platform architecture.

Resumable, thanks to continuous checkpoints

All the intermediate results produced during the pipeline execution are automatically tracked. For each process a temporary folder is created and is cached (or not) once resuming an execution.

Workflow structure

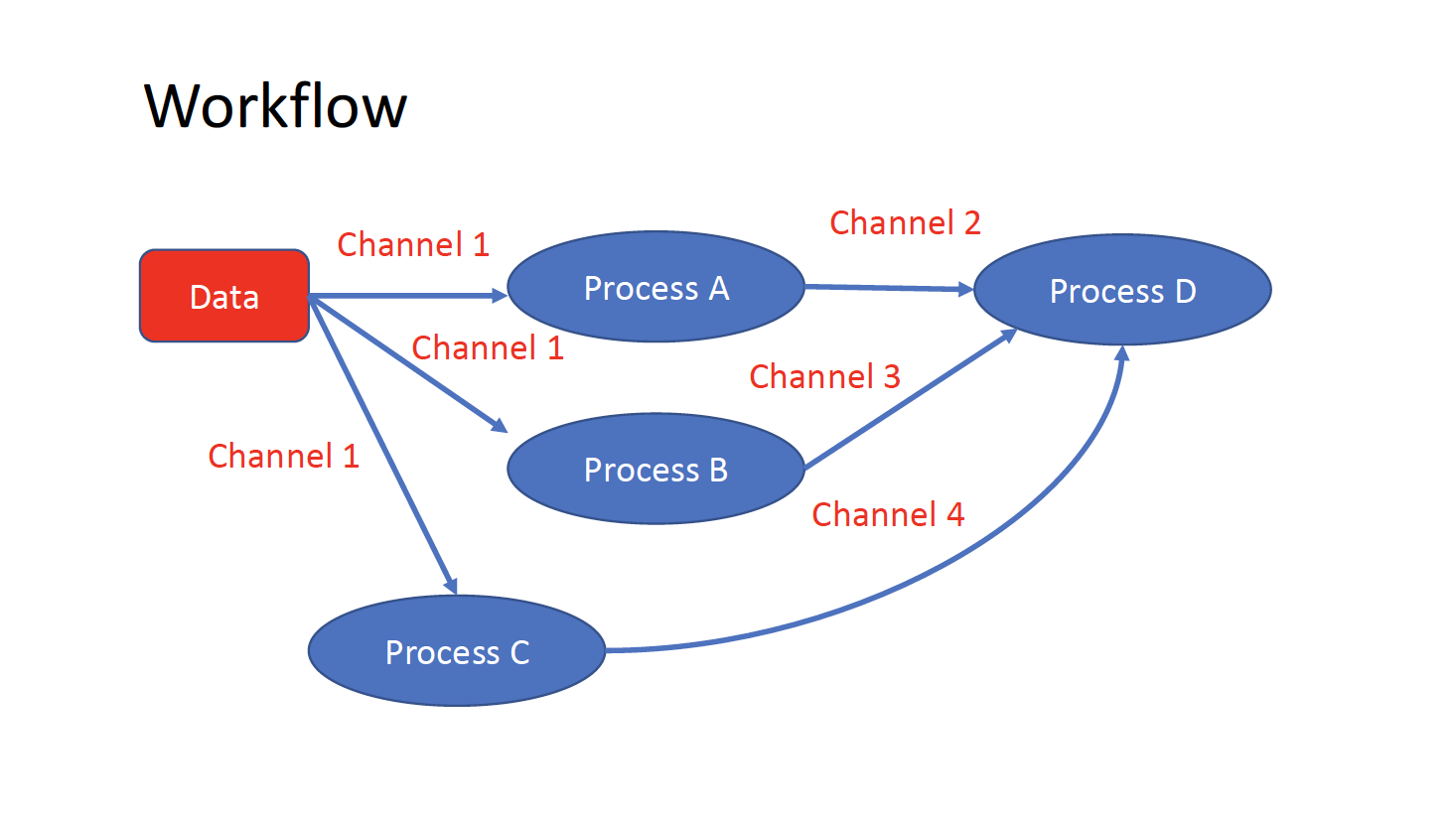

The workflows can be represented as graphs where the nodes are the processes and the edges are the channels. The processes are blocks of code that can be executed - such as scripts or programs - while the channels are asynchronous queues able to connect processes among them via input / output.

Processes are independent from each another and can be run in parallel, depending on the number of elements in a channel. In the previous example, processes A, B and C can be run in parallel and only when they ALL end the process D is triggered.

Installation

Note

Nextflow is already installed on the machines provided for this course. You need at least the Java version 8 for the Nextflow installation.

Tip

You can check the version fo java by typing:

java -version

Then we can install Nextflow with:

curl -s https://get.nextflow.io | bash

This will create the nextflow executable that can be moved, for example, to /usr/local/bin.

We can test that the installation was successful with:

nextflow run hello

N E X T F L O W ~ version 20.07.1

Pulling nextflow-io/hello ...

downloaded from https://github.com/nextflow-io/hello.git

Launching `nextflow-io/hello` [peaceful_brahmagupta] - revision: 96eb04d6a4 [master]

executor > local (4)

[d7/d053b5] process > sayHello (4) [100%] 4 of 4 ✔

Ciao world!

Bonjour world!

Hello world!

Hola world!

This command downloads and runs the pipeline hello.

We can now launch a test pipeline:

nextflow run nextflow-io/rnaseq-nf -with-singularity

This command will automatically pull the pipeline and the required test data from the github repository

The command -with-singularity will automatically trigger the download of the image nextflow/rnatoy:1.3 from DockerHub and convert it on-the-fly into a singularity image that will be used for running each step of the pipeline.

The pipeline can also recognize the queue system which is used on the machine where it is launched. In the following examples, I launched the same pipeline both on the CRG high performance computing (HPC) cluster and on my MacBook:

The result from CRG HPC:

nextflow run nextflow-io/rnaseq-nf -with-singularity

N E X T F L O W ~ version 21.04.3

Pulling nextflow-io/rnaseq-nf ...

downloaded from https://github.com/nextflow-io/rnaseq-nf.git

Launching `nextflow-io/rnaseq-nf` [serene_wing] - revision: 83bdb3199b [master]

R N A S E Q - N F P I P E L I N E

===================================

transcriptome: /users/bi/lcozzuto/.nextflow/assets/nextflow-io/rnaseq-nf/data/ggal/ggal_1_48850000_49020000.Ggal71.500bpflank.fa

reads : /users/bi/lcozzuto/.nextflow/assets/nextflow-io/rnaseq-nf/data/ggal/*_{1,2}.fq

outdir : results

[- ] process > RNASEQ:INDEX -

[- ] process > RNASEQ:FASTQC -

executor > crg (6)

[cc/dd76f0] process > RNASEQ:INDEX (ggal_1_48850000_49020000) [100%] 1 of 1 ✔

[7d/7a96f2] process > RNASEQ:FASTQC (FASTQC on ggal_liver) [100%] 2 of 2 ✔

[ab/ac8558] process > RNASEQ:QUANT (ggal_gut) [100%] 2 of 2 ✔

[a0/452d3f] process > MULTIQC [100%] 1 of 1 ✔

Pulling Singularity image docker://quay.io/nextflow/rnaseq-nf:v1.0 [cache /nfs/users2/bi/lcozzuto/aaa/work/singularity/quay.io-nextflow-rnaseq-nf-v1.0.img]

WARN: Singularity cache directory has not been defined -- Remote image will be stored in the path: /nfs/users2/bi/lcozzuto/aaa/work/singularity -- Use env variable NXF_SINGULARITY_CACHEDIR to specify a different location

Done! Open the following report in your browser --> results/multiqc_report.html

Completed at: 01-Oct-2021 12:01:50

Duration : 3m 57s

CPU hours : (a few seconds)

Succeeded : 6

The result from my MacBook:

nextflow run nextflow-io/rnaseq-nf -with-docker

N E X T F L O W ~ version 21.04.3

Launching `nextflow-io/rnaseq-nf` [happy_torvalds] - revision: 83bdb3199b [master]

R N A S E Q - N F P I P E L I N E

===================================

transcriptome: /Users/lcozzuto/.nextflow/assets/nextflow-io/rnaseq-nf/data/ggal/ggal_1_48850000_49020000.Ggal71.500bpflank.fa

reads : /Users/lcozzuto/.nextflow/assets/nextflow-io/rnaseq-nf/data/ggal/*_{1,2}.fq

outdir : results

executor > local (6)

[37/933971] process > RNASEQ:INDEX (ggal_1_48850000_49020000) [100%] 1 of 1 ✔

[fe/b06693] process > RNASEQ:FASTQC (FASTQC on ggal_gut) [100%] 2 of 2 ✔

[73/84b898] process > RNASEQ:QUANT (ggal_gut) [100%] 2 of 2 ✔

[f2/917905] process > MULTIQC [100%] 1 of 1 ✔

Done! Open the following report in your browser --> results/multiqc_report.html